Apple Opens Private Cloud Compute for Security Research

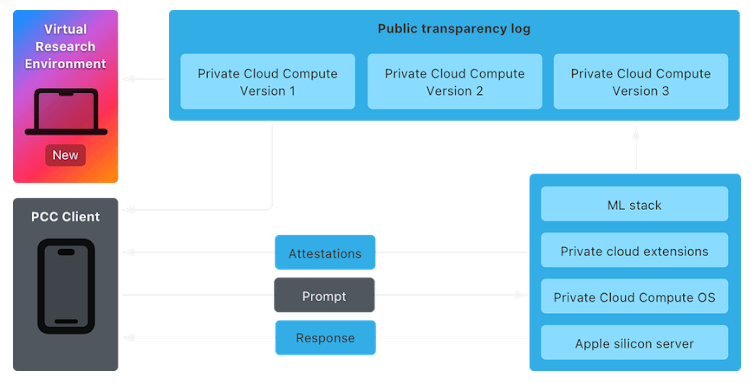

In June, Apple unveiled its Private Cloud Compute (PCC), promoting it as the “most advanced security system ever built for cloud AI computing on a large scale.” This innovation allows the processing of demanding AI tasks in the cloud while maintaining user privacy.

Now, Apple has made the PCC Virtual Research Environment (VRE) available to the public, inviting researchers to test its security and privacy features. Apple encourages security and privacy experts — or anyone with a technical background and curiosity — to explore PCC and verify its security claims.

Incentives for Security Researchers

To boost interest, Apple has expanded its Security Bounty program to include PCC, offering rewards ranging from $50,000 to $1,000,000 for uncovering security issues. This covers various vulnerabilities, such as server problems that might execute harmful code or exploits that could access users’ private data.

Tools for Analysis and Available Resources

The VRE equips Mac researchers with tools to examine PCC. It includes support for inference with a virtual Secure Enclave Processor (SEP) and paravirtualized graphics on macOS. Apple is also making parts of the PCC source code available on GitHub for further analysis, including projects like Thimble, CloudAttestation, splunkloggingd, and srd_tools.

“We developed Private Cloud Compute as part of Apple Intelligence to elevate AI privacy,” the company said. “It ensures verifiable transparency — a feature that sets it apart from other cloud-based AI systems.”

Growing Security Concerns in AI Systems

Deceptive Delight: Exploiting AI Chatbots

The field of generative AI continues to face new challenges, such as vulnerabilities that allow the manipulation of large language models (LLMs). One such attack, recently described by Palo Alto Networks, is known as “Deceptive Delight.” It tricks AI chatbots into bypassing their security by combining malicious and innocent queries. The process involves requesting the chatbot to connect different events logically, including restricted topics, to generate unintended responses.

ConfusedPilot: Poisoning Data for RAG-Based Systems

Another technique, known as the “ConfusedPilot” attack, targets Retrieval-Augmented Generation (RAG)-based AI systems, like Microsoft 365 Copilot. This method introduces seemingly harmless documents that contain specific strings to manipulate the AI’s data environment, potentially leading to misinformation and incorrect decisions.

ShadowLogic: Hidden Backdoors in Machine Learning Models

Lastly, the “ShadowLogic” attack enables attackers to plant “codeless” backdoors within machine learning models, such as ResNet, YOLO, and Phi-3. By altering the computational graphs of these models, attackers can trigger harmful behaviors in applications using these models. Unlike typical software backdoors that rely on executing code, these embedded backdoors are harder to detect and mitigate, making them a serious risk to AI supply chains.

By addressing these emerging threats, companies can better secure their AI systems and safeguard user data from potential exploits.