DeepSeek’s Database Leak Raises Security Concerns

AI Firm DeepSeek Exposed Sensitive Data Online

A major security lapse at the Chinese AI company DeepSeek recently came to light after researchers discovered an open database that could have allowed hackers to access sensitive information.


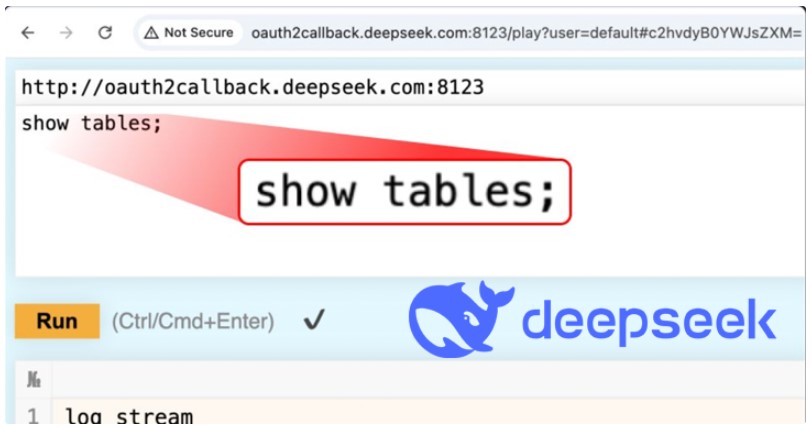

According to cybersecurity expert Gal Nagli from Wiz, the unprotected ClickHouse database gave anyone full control over its contents. This meant unauthorized individuals could access internal data, execute database commands, and possibly manipulate the system.

What Information Was at Risk?

The exposed database contained over a million lines of log data, including private conversation histories, secret keys, backend infrastructure details, and API credentials. Such information is highly sensitive and could have been exploited if it fell into the wrong hands.

The database, hosted on oauth2callback.deepseek[.]com:9000 and dev.deepseek[.]com:9000, did not require authentication, meaning that anyone who knew the addresses could connect to it.

How Could the Database Be Exploited?

Attackers could use ClickHouse’s HTTP interface to send SQL queries directly from a web browser. This misconfiguration made it possible not only to extract data but potentially to escalate privileges within DeepSeek’s environment. It remains unclear whether any malicious actors exploited the exposure, but the risk was significant.
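
For teams that run ClickHouse themselves, the exposure described above can be checked with a harmless query. The following is a minimal sketch, not a description of how Wiz tested DeepSeek's servers. It assumes ClickHouse's HTTP interface is listening on 8123, its default HTTP port, so adjust the port to match your deployment. The function names and the example hostname are hypothetical, and the check should only be run against hosts you operate.

```python
from urllib.error import URLError
from urllib.parse import quote, urlencode
from urllib.request import urlopen


def build_query_url(host: str, sql: str, port: int = 8123) -> str:
    """Return the URL that asks ClickHouse's HTTP interface to run `sql`."""
    # The HTTP interface accepts the statement in the `query` URL parameter.
    params = urlencode({"query": sql}, quote_via=quote)
    return f"http://{host}:{port}/?{params}"


def answers_without_auth(host: str, port: int = 8123, timeout: float = 3.0) -> bool:
    """Return True if the server runs `SELECT 1` with no credentials supplied."""
    try:
        with urlopen(build_query_url(host, "SELECT 1", port), timeout=timeout) as resp:
            # An open server replies with the single value 1.
            return resp.status == 200 and resp.read().strip() == b"1"
    except (URLError, OSError):
        # Refused connections, timeouts and HTTP auth errors all count as "not open".
        return False


if __name__ == "__main__":
    # Hypothetical hostname; replace with a server you are responsible for.
    print(answers_without_auth("clickhouse.example.internal"))
```

A result of `True` means the server executed a query for an anonymous caller, which is the same class of misconfiguration the article describes.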

After being contacted by Wiz, DeepSeek moved quickly to secure the database and fix the issue.

AI Security: A Growing Concern

Nagli emphasized that as AI tools gain popularity, security risks also increase. While discussions about AI threats often focus on futuristic dangers, real problems arise from basic security mistakes, like leaving databases exposed.

“Companies developing AI must put security first,” Nagli stated. “It’s essential that cybersecurity teams work closely with AI developers to ensure sensitive information remains protected.”

DeepSeek’s Rise and Challenges

DeepSeek has gained significant attention for its open-source AI models, which some claim rival OpenAI’s top-tier systems. The company’s reasoning model, R1, has even been described as “AI’s Sputnik moment.”

Its AI chatbot quickly became one of the most downloaded apps on both Android and iOS. However, due to “large-scale malicious attacks,” the company recently had to halt new user registrations. In a January 29, 2025, update, DeepSeek acknowledged the security issue and confirmed it was working on a solution.

Privacy and National Security Issues

DeepSeek is also facing scrutiny over its privacy policies and ties to China, raising concerns among U.S. national security officials.

In Europe, the Italian data protection authority, Garante, requested details on how DeepSeek collects and processes user data. Soon after, DeepSeek’s apps became unavailable in Italy. The Irish Data Protection Commission (DPC) has made similar inquiries, though it remains unclear if these actions were linked.

OpenAI and Microsoft Investigate DeepSeek

Reports from Bloomberg, the Financial Times, and The Wall Street Journal suggest that OpenAI and Microsoft are investigating DeepSeek over allegations that it may have trained its AI models using OpenAI’s API without permission. This technique, known as “distillation,” involves training a model on another AI system’s outputs so that it replicates that system’s capabilities.
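
To make the idea concrete, the data-collection step of distillation can be sketched in a few lines. This is a toy illustration of the general technique only and says nothing about what DeepSeek did or did not do. `toy_teacher` and `build_distillation_set` are hypothetical names, and the teacher here is a stand-in function rather than a real model or API.

```python
from typing import Callable, Dict, List


def toy_teacher(prompt: str) -> str:
    """Stand-in for a larger model; purely illustrative."""
    return prompt.upper()


def build_distillation_set(
    teacher: Callable[[str], str], prompts: List[str]
) -> List[Dict[str, str]]:
    """Pair each prompt with the teacher's answer to form training examples."""
    return [{"prompt": p, "completion": teacher(p)} for p in prompts]


if __name__ == "__main__":
    dataset = build_distillation_set(toy_teacher, ["hello", "what is ai?"])
    for example in dataset:
        print(example)
```

A smaller "student" model would then be fine-tuned on these prompt and completion pairs so that its answers move toward the teacher's.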

A spokesperson from OpenAI commented, “We are aware that groups in China are actively trying to replicate advanced U.S. AI models using distillation techniques.”

Conclusion

DeepSeek’s recent data exposure serves as a wake-up call for AI companies to strengthen their security measures. With increasing scrutiny over data privacy, national security concerns, and allegations of unauthorized AI training, DeepSeek faces significant challenges ahead. How it handles these issues will determine its future in the AI industry.